We’ve been upgrading a lot of customers from Sitecore 6.x, as Sitecore 6.x is reaching the end of its maintenance support. As you know, Sitecore 7.5 introduced a new component, xDB, which runs on MongoDB. Before 7.5, Sitecore development for the most part did not involve any database other than SQL Server or Oracle. With the introduction of xDB, getting a MongoDB instance running has become an essential part of the upgrade. If you are unfamiliar with MongoDB, there are several ways to get an instance: the cloud offering from Sitecore, a cloud offering from a MongoDB hosting provider, or setting up your own.

For a myriad of reasons (mostly legal), our situation required us to host our own, so not only did we have to learn how to manage a MongoDB instance, but we also had to learn how to design the hardware infrastructure, taking best practices and redundancy into account. You can get up to speed on managing an instance on the MongoDB University site, and you can find documentation on designing a production MongoDB cluster as well. In my last post, we went through the conceptual implications of this setup and how to set it up locally for development. After going through all the documentation, this is the production cluster infrastructure we settled on.

Overall Architecture

So many servers! What do they all do?

It is a daunting number of servers (trust me, the budgeting people were not happy). I should preface this by explaining that the environment is meant to support close to 60 Sitecore instances with a lot of traffic, and to be ready for an additional 100 instances, so we wanted something that would scale well. With virtual machines, the cost of an individual machine is much lower than in previous years, and MongoDB runs fine on VMs. For best performance, SSDs (solid state drives) are preferable, and we decided to go that route; in fact, the SSDs are the most expensive component of this infrastructure.

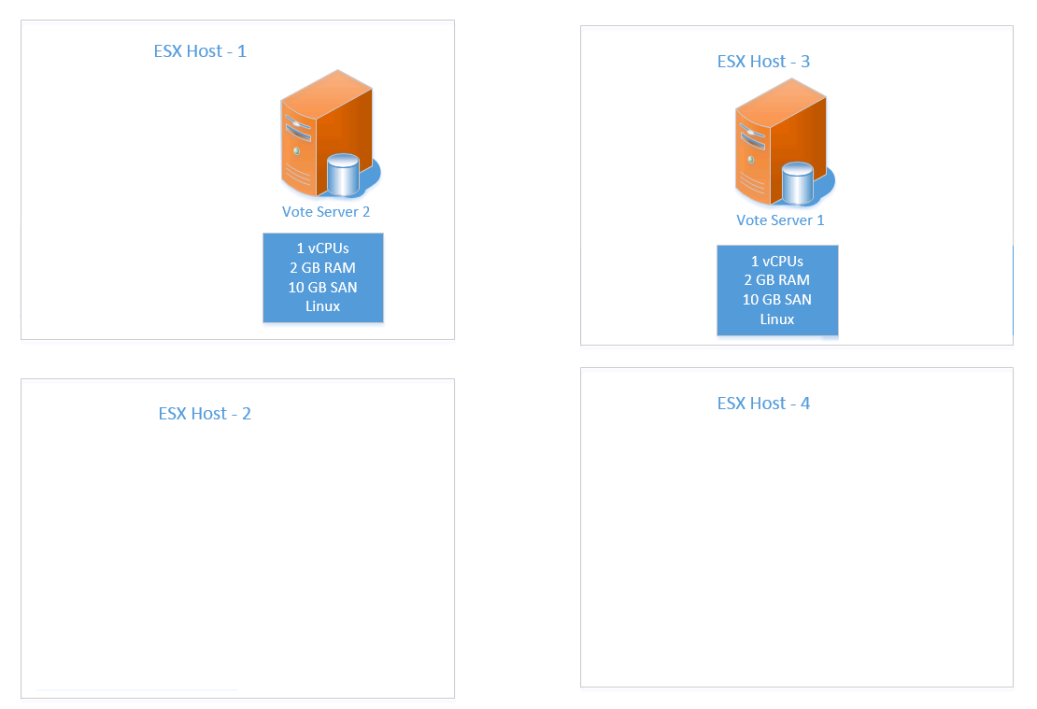

Overall, we decided to spread this out over 4 different VM hosts, and to never put a fallback VM on the same host as the VM it backs up. That way, if the host with the primary VM dies, the fallback VM is not affected, and if the host with the fallback VM dies, it doesn’t take the primary VM with it. In short, when we spread out the VMs, we never put a primary and its secondary on the same host.
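
The placement rule is easy to check mechanically. Below is a minimal Python sketch, assuming a hypothetical inventory that maps each VM name to the host it runs on; the VM and host names are illustrative, not our actual ones. It flags any primary/fallback pair that ended up on the same host.

```python
from typing import Dict, List, Tuple

# Hypothetical inventory: VM name -> VM host it runs on.
PLACEMENT: Dict[str, str] = {
    "shard1-primary": "host1",
    "shard1-secondary": "host2",
    "shard2-primary": "host3",
    "shard2-secondary": "host4",
}

# Pairs of VMs that must never share a host (a primary and its fallback).
REDUNDANT_PAIRS: List[Tuple[str, str]] = [
    ("shard1-primary", "shard1-secondary"),
    ("shard2-primary", "shard2-secondary"),
]


def placement_violations(
    placement: Dict[str, str], pairs: List[Tuple[str, str]]
) -> List[Tuple[str, str]]:
    """Return every redundant pair whose two VMs sit on the same host,
    i.e. the pairs that a single host failure would wipe out entirely."""
    return [(a, b) for a, b in pairs if placement[a] == placement[b]]


if __name__ == "__main__":
    print(placement_violations(PLACEMENT, REDUNDANT_PAIRS))  # []
    # Accidentally move shard1's secondary onto shard1's primary host.
    bad = {**PLACEMENT, "shard1-secondary": "host1"}
    print(placement_violations(bad, REDUNDANT_PAIRS))
    # [('shard1-primary', 'shard1-secondary')]
```

Running a check like this whenever VMs are migrated between hosts helps catch a primary and secondary drifting onto the same physical machine.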

Query Routers

Query routers are the front-end connection points for the application: in the Sitecore connectionStrings.config, these are the servers you connect to. A query router is also called a ‘mongos’ instance. These servers don’t hold the actual data, and to the application a mongos behaves exactly like the single ‘mongod’ instance you would use in a development environment; for that reason, the server specs are fairly average. Routers can either be set up as primary/secondary (i.e. the primary falls back to the secondary) or be load-balanced (i.e. one set of applications connects to one router, while another set connects to another). In our case, since we have almost 60 sites, we simply split the load, but if one router fails, we can always switch its sites to the other router.
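
To make the split-load approach concrete, here is a small Python sketch that assigns sites to routers round-robin and builds a standard `mongodb://host:port/database` URI for each. The router host names, site names, and the `analytics` database name are hypothetical placeholders, and `fail_over` simply models repointing sites at the surviving router by hand; it is not a Sitecore or MongoDB feature.

```python
from typing import Dict, List

# Hypothetical mongos router addresses; substitute your own servers.
ROUTERS: List[str] = ["mongos-a.example.local:27017", "mongos-b.example.local:27017"]


def assign_routers(sites: List[str], routers: List[str]) -> Dict[str, str]:
    """Split sites across routers round-robin so each router carries part of the load."""
    return {site: routers[i % len(routers)] for i, site in enumerate(sites)}


def connection_string(router: str, database: str) -> str:
    """Build a standard mongodb:// URI that points a site at a single mongos."""
    return f"mongodb://{router}/{database}"


def fail_over(assignment: Dict[str, str], failed: str, routers: List[str]) -> Dict[str, str]:
    """Repoint every site on the failed router to the first remaining router."""
    healthy = [r for r in routers if r != failed]
    if not healthy:
        raise RuntimeError("no healthy router left")
    return {site: healthy[0] if r == failed else r for site, r in assignment.items()}


if __name__ == "__main__":
    sites = ["site01", "site02", "site03", "site04"]
    assignment = assign_routers(sites, ROUTERS)
    for site, router in assignment.items():
        print(site, connection_string(router, "analytics"))
    # If router A dies, all four sites move to router B.
    print(fail_over(assignment, ROUTERS[0], ROUTERS))
```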

Note: we didn’t designate which host the routers go on, because we already had another host that could contain the router VMs. If you are building from scratch, keep in mind that you will need a host for these VMs too, unless you can overlap roles. See ‘Some Considerations for Costs’ below.

Config Servers

The config servers are special ‘mongod’ instances that hold the metadata describing where data lives in the sharded cluster. Their load is also usually light, so their specs are average as well. For redundancy there should be more than one config server, and in a production cluster there should be exactly 3, because the MongoDB configuration supports either 1 or 3 config servers. Config servers are updated using a two-phase commit, which needs agreement from multiple servers, so in a 2-server scenario you ‘could’ potentially end up in a failing situation.
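
Why a metadata update depends on more than one config server can be illustrated with a generic two-phase commit. This Python sketch is a simplified model, not MongoDB’s internal implementation: every config server must vote yes in the prepare phase before any of them applies the change, so a single unreachable server blocks the update while keeping all copies consistent.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConfigServer:
    name: str
    up: bool = True
    metadata_version: int = 0

    def prepare(self) -> bool:
        # Phase 1 (prepare): a server can only vote "yes" if it is reachable.
        return self.up


def commit_metadata_change(servers: List[ConfigServer]) -> bool:
    """Generic two-phase commit: apply the change only if every server votes yes."""
    votes = [s.prepare() for s in servers]  # collect every vote first
    if not all(votes):
        return False  # abort: no server applies the change
    for s in servers:
        s.metadata_version += 1  # Phase 2 (commit): every server applies it
    return True


if __name__ == "__main__":
    cluster = [ConfigServer(f"cfg{i}") for i in range(1, 4)]
    print(commit_metadata_change(cluster))  # True, all three reachable
    cluster[1].up = False
    print(commit_metadata_change(cluster))  # False, one server is down
    print([s.metadata_version for s in cluster])  # [1, 1, 1], still consistent
```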

Shards

These are the base ‘mongod’ instances that hold the data. For redundancy, each shard should be replicated (a primary and a secondary), so that’s two servers per shard. For good data distribution, there should be at least 2 shards, which brings the total up to 4 servers. You can always start with 1 shard, but adding a second shard later can be time-consuming, since the existing data has to be redistributed. Also, with only a single shard there is no real need for config servers, so if you start with 1 shard and want to add a second one later, you will also need to add the config servers. For ease of future scalability, we decided to start with 2 shards so that adding shards later will not be a long process. These servers are the guts of the cluster and have fairly high specs: lots of RAM and lots of disk space.
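
Why is adding a shard later time-consuming? A rough model: MongoDB splits a sharded collection into chunks, and a balancer migrates chunks until the shards hold roughly equal numbers. The sketch below assumes uniform chunk sizes and a greedy "fullest shard to emptiest shard" move, which is a simplification of the real balancer, and counts how many chunks have to move.

```python
from typing import List, Tuple


def rebalance(chunks_per_shard: List[int]) -> Tuple[List[int], int]:
    """Greedily move one chunk at a time from the fullest shard to the emptiest
    until no two shards differ by more than one chunk. Returns the final
    distribution and the number of chunks migrated."""
    counts = list(chunks_per_shard)
    moved = 0
    while max(counts) - min(counts) > 1:
        src = counts.index(max(counts))
        dst = counts.index(min(counts))
        counts[src] -= 1
        counts[dst] += 1
        moved += 1
    return counts, moved


if __name__ == "__main__":
    # Start with 1 shard holding 1,000 chunks, then add a second (empty) shard.
    print(rebalance([1000, 0]))       # ([500, 500], 500)
    # Start with 2 balanced shards, then add a third (empty) shard.
    print(rebalance([500, 500, 0]))   # ([333, 334, 333], 333)
```

In this model, going from 1 shard to 2 moves half of the chunks, while going from 2 balanced shards to 3 moves about a third of them. Each migration copies real documents over the network, which is where the time goes.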

Vote Servers

The vote servers (MongoDB calls them arbiters) help decide which member of a replica set becomes primary when the current primary dies; they hold no data and only vote. The minimum number of data-bearing members in a replica set is usually 2, a primary and a secondary. Secondaries are read-only, so when the primary dies, the set cannot accept writes until an election decides which remaining member becomes primary, and a member needs votes from a majority of all voting members to win. For that reason, the total number of voting members (data-bearing members plus vote servers) should be odd, so there is always a clear winner. In our case, with only 2 members in each replica set, the vote server is what lets the surviving member win 2 of 3 votes and become primary; without it, the set would be left without a primary. These are very light servers and so can have very light specs. If we add another member to our replica sets, we will need to add another vote server role to keep the number of voters odd.
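
The election arithmetic can be written down directly. This Python sketch assumes only the majority rule: a candidate needs votes from more than half of all voting members, whether or not those members are currently reachable. It shows why a 2-member set needs a vote server and why an even number of voters adds no extra failure tolerance. The function names are illustrative.

```python
def has_majority(votes: int, total_voters: int) -> bool:
    """An election succeeds only with votes from more than half of ALL voting
    members, counting the ones that are down."""
    return votes > total_voters // 2


def survives_primary_loss(data_members: int, vote_servers: int) -> bool:
    """Can the remaining voters still elect a primary after one data member dies?"""
    total = data_members + vote_servers
    return has_majority(total - 1, total)


def failures_tolerated(total_voters: int) -> int:
    """How many voting members can fail while a majority is still reachable."""
    majority = total_voters // 2 + 1
    return total_voters - majority


if __name__ == "__main__":
    print(survives_primary_loss(2, 0))  # False: 1 of 2 votes is not a majority
    print(survives_primary_loss(2, 1))  # True: 2 of 3 votes is a majority
    for n in (3, 4, 5):
        print(n, "voters tolerate", failures_tolerated(n), "failure(s)")
```

Note that 4 voters tolerate no more failures than 3 (one each), while 5 tolerate two, which is the reasoning behind keeping the voter count odd.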

Some Considerations for Costs

No doubt this setup costs a lot. It is meant to be an enterprise-level setup with lots of room for growth. It may be overkill on some levels, but if you have sites that rely heavily on xDB, the MongoDB instance becomes a critical single point of failure, and we can’t have that. For less critical needs, however, some of the redundancy can be removed and the VMs can be consolidated onto fewer hosts.

- Many of these roles can overlap. For example, the config and vote server roles can run on the same servers, eliminating the need for separate vote servers.

- You can theoretically also run the config servers and router instances on the same servers, but that reduces redundancy somewhat.

- Depending on how important xDB is to your Sitecore instance, you also don’t necessarily need to have redundancy at every layer.

- As mentioned, SSDs are expensive. Depending on your application’s traffic, you could opt not to use SSDs.

- Because we had room available on other hosts, we didn’t have to get another host for the routers. The router VMs could also be overlapped onto the hosts in this setup, since those have PLENTY of room for more VMs.
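
For budgeting, it can help to turn these trade-offs into a quick VM count. This Python sketch uses illustrative defaults (2 shards of 2 members, 3 config servers, 2 routers, and a hypothetical 2 vote servers, one per replica set) rather than the exact counts in our diagram, and it assumes any co-located roles fit on the config servers.

```python
from dataclasses import dataclass


@dataclass
class ClusterPlan:
    # Illustrative defaults only; adjust to your own design.
    shards: int = 2
    members_per_shard: int = 2       # primary + secondary
    config_servers: int = 3
    routers: int = 2
    vote_servers: int = 2            # hypothetical: one per replica set
    votes_on_config: bool = False    # run vote servers on the config servers
    routers_on_config: bool = False  # run mongos on the config servers

    def vm_count(self) -> int:
        """Count VMs, assuming co-located roles fit on the config servers."""
        count = self.shards * self.members_per_shard + self.config_servers
        if not self.routers_on_config:
            count += self.routers
        if not self.votes_on_config:
            count += self.vote_servers
        return count


if __name__ == "__main__":
    print(ClusterPlan().vm_count())                       # 11
    print(ClusterPlan(votes_on_config=True).vm_count())   # 9
    print(ClusterPlan(votes_on_config=True, routers_on_config=True).vm_count())  # 7
```

The count only captures VMs; co-locating roles also shrinks the number of hosts you need, at the redundancy cost described in the list.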

Conclusion

If you’re able to use a cloud offering to host your xDB, I think it is a good choice, since it is one less element to manage on your own. But if you have to roll your own, I hope this is a good guide for deciding what hardware to get to comfortably support your Sitecore instance as it grows. This setup is in the process of being implemented, and I intend to write another post once it is up and running to report my findings. Stay tuned!
